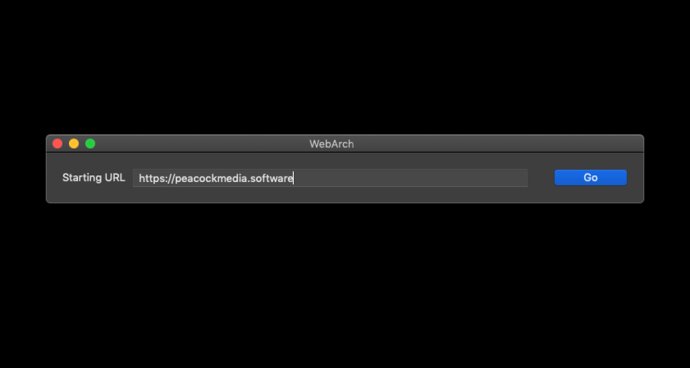

This software lets you crawl a website and save all of its files locally through a user-friendly interface. It runs locally rather than as a cloud service, so you retain ownership of your data. You can choose to preserve all files as fetched or process them into a browsable local copy.

In addition to a straightforward user experience, our software offers robust crawl settings. You can limit the rate at which the crawler accesses a website, blacklist or whitelist specific URLs, and even spoof the user-agent string, giving you everything you need to customize your crawler.

One of the biggest benefits of our software is that it runs locally rather than as a cloud service. This means that you own all of your data and maintain complete control over it.

When it comes to file preservation, our software gives you two options. You can preserve all files exactly as they were fetched, under their original filenames. Alternatively, you can process the files so that they are easier to browse within your local copy.

Overall, our website crawler software is a powerful, customizable tool that's perfect for anyone looking to save and analyze website data.

Version 0.5.0:

Improvements that help avoid missing resources

Now built to run natively on Intel and Apple Silicon Macs

Inherits recent improvements to the Integrity crawling engine